In this tutorial, we build an advanced Agentic Retrieval-Augmented Generation (RAG) system that goes beyond simple question answering. We design it to intelligently route queries to the right knowledge sources, perform self-checks to assess answer quality, and iteratively refine responses for improved accuracy. We implement the entire system using open-source tools like FAISS, SentenceTransformers, and Flan-T5. As we progress, we explore how routing, retrieval, generation, and self-evaluation combine to form a decision-tree-style RAG pipeline that mimics real-world agentic reasoning. Check out the FULL CODES here.

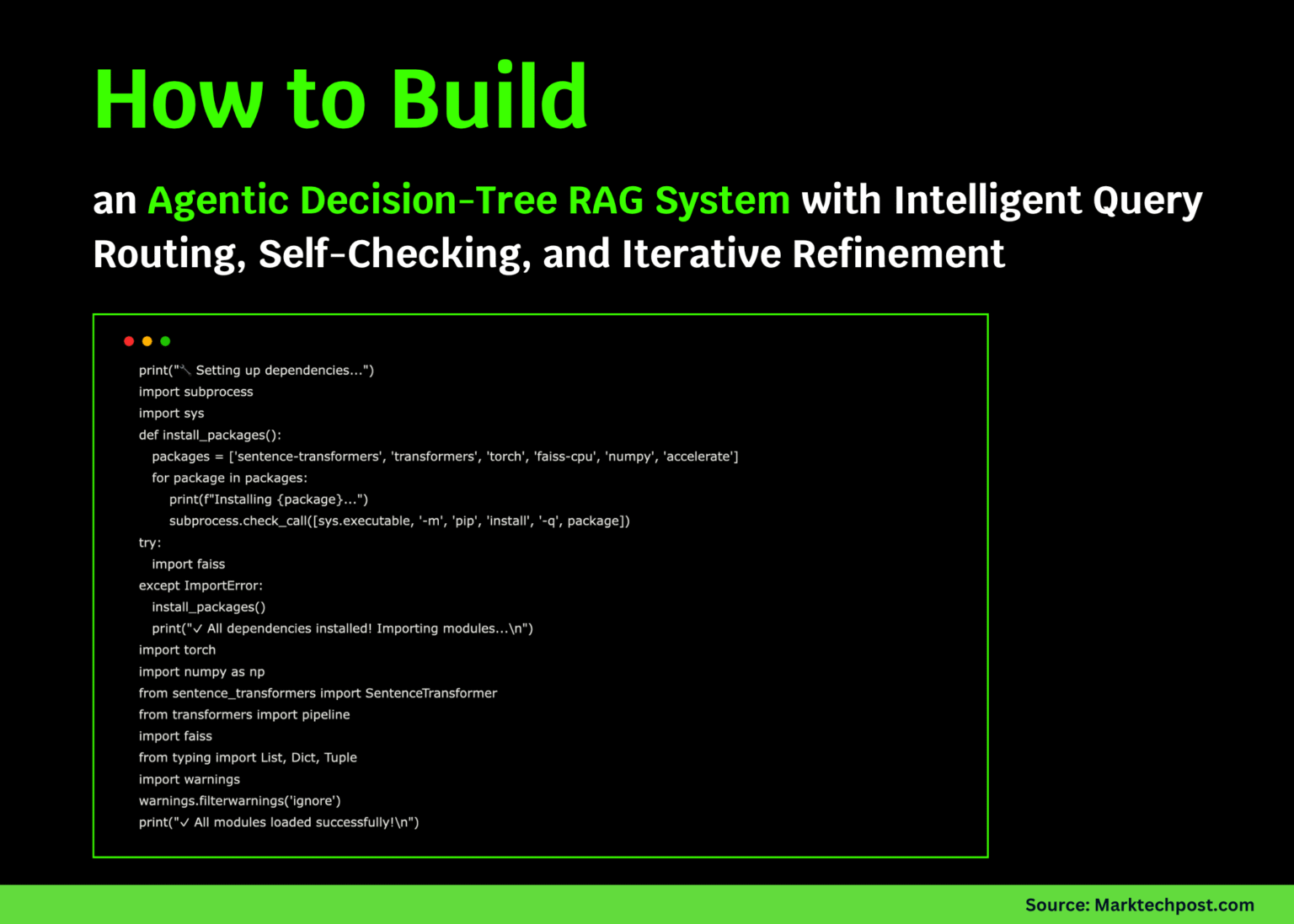

import subprocess
import sys

def install_packages():
    """Install the tutorial's dependencies with the current interpreter's pip."""
    packages = ['sentence-transformers', 'transformers', 'torch', 'faiss-cpu', 'numpy', 'accelerate']
    for package in packages:
        print(f"Installing {package}...")
        subprocess.check_call([sys.executable, '-m', 'pip', 'install', '-q', package])

# Install only when FAISS cannot be imported, which we treat as a fresh environment.
try:
    import faiss
except ImportError:
    install_packages()

print("✓ All dependencies installed! Importing modules...\n")

import torch
import numpy as np
from sentence_transformers import SentenceTransformer
from transformers import pipeline
import faiss
from typing import List, Dict, Tuple
import warnings

warnings.filterwarnings('ignore')

print("✓ All modules loaded successfully!\n")

We begin by installing the required dependencies (Transformers, FAISS, SentenceTransformers, PyTorch, NumPy, and Accelerate) so that everything runs locally. The script installs them only if FAISS cannot be imported, then imports the modules needed for embedding, retrieval, and generation, and confirms that they load before we move on to the main pipeline.
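The install-if-missing check in the listing keys on FAISS alone. As a minimal sketch of a broader check, assuming we only want to know which top-level modules are importable, the standard library's `importlib.util.find_spec` can report missing modules without importing them. The helper name `missing_modules` is hypothetical and not part of the original tutorial.

```python
import importlib.util
from typing import List

def missing_modules(module_names: List[str]) -> List[str]:
    """Return the top-level module names that cannot be found (hypothetical helper)."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]

# Import names can differ from pip package names (faiss-cpu installs `faiss`).
required = ["faiss", "torch", "numpy", "sentence_transformers", "transformers"]
print("Missing modules:", missing_modules(required))
```

Only the modules reported as missing would then need to be installed, rather than the whole list.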

class VectorStore:
    """In-memory document store backed by a FAISS flat L2 index."""

    def __init__(self, embedding_model="all-MiniLM-L6-v2"):
        print(f"Loading embedding model: {embedding_model}...")
        self.embedder = SentenceTransformer(embedding_model)
        self.documents = []
        self.index = None

    def add_documents(self, docs: List[str], sources: List[str]):
        # zip() stops at the shorter list, so docs and sources must be the same length.
        self.documents = [{"text": doc, "source": src} for doc, src in zip(docs, sources)]
        embeddings = self.embedder.encode(docs, show_progress_bar=False)
        dimension = embeddings.shape[1]
        self.index = faiss.IndexFlatL2(dimension)
        self.index.add(embeddings.astype('float32'))
        print(f"✓ Indexed {len(docs)} documents\n")

    def search(self, query: str, k: int = 3) -> List[Dict]:
        query_vec = self.embedder.encode([query]).astype('float32')
        # FAISS pads results with -1 when k exceeds the index size, so cap k first.
        k = min(k, len(self.documents))
        distances, indices = self.index.search(query_vec, k)
        return [self.documents[i] for i in indices[0]]

We design the VectorStore class to store documents and retrieve them with FAISS similarity search. We embed each document with a SentenceTransformer model (all-MiniLM-L6-v2 by default) and add the vectors to a flat L2 index. At query time we embed the query the same way and return the k nearest documents as context.
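To make the retrieval step concrete, here is a minimal pure-Python sketch of what a flat L2 index computes conceptually: the squared Euclidean distance from the query to every stored vector, followed by a top-k selection. The function `flat_l2_search` is a hypothetical stand-in for illustration, not FAISS's implementation, and it uses toy 2-D vectors rather than real embeddings.

```python
from typing import List, Sequence, Tuple

def flat_l2_search(
    vectors: Sequence[Sequence[float]], query: Sequence[float], k: int
) -> List[Tuple[float, int]]:
    """Brute-force nearest neighbours: return up to k (squared_distance, index) pairs."""
    scored = []
    for idx, vec in enumerate(vectors):
        # Squared L2 distance between the stored vector and the query.
        dist = sum((a - b) ** 2 for a, b in zip(vec, query))
        scored.append((dist, idx))
    scored.sort()          # smallest distance first
    return scored[:k]      # fewer than k results if the store is small

vectors = [[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]]
print(flat_l2_search(vectors, [0.0, 0.0], k=2))  # [(0.0, 0), (1.0, 1)]
```

An exhaustive scan like this is exact but linear in the number of documents, which is fine for a demo-sized knowledge base.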

class QueryRouter:
    """Keyword-based classifier that assigns each query an intent category."""

    def __init__(self):
        self.categories = {
            'technical': ['how', 'implement', 'code', 'function', 'algorithm', 'debug'],
            'factual': ['what', 'who', 'when', 'where', 'define', 'explain'],
            'comparative': ['compare', 'difference', 'versus', 'vs', 'better', 'which'],
            'procedural': ['steps', 'process', 'guide', 'tutorial', 'how to']
        }

    def route(self, query: str) -> str:
        query_lower = query.lower()
        scores = {}
        for category, keywords in self.categories.items():
            # Count the keywords that occur as substrings of the lowercased query.
            score = sum(1 for kw in keywords if kw in query_lower)
            scores[category] = score
        best_category = max(scores, key=scores.get)
        # Fall back to 'factual' when no keyword matches at all.
        return best_category if scores[best_category] > 0 else 'factual'

We introduce the QueryRouter class to classify queries by intent: technical, factual, comparative, or procedural. We count how many of each category's keywords appear in the query and pick the highest-scoring category, falling back to factual when nothing matches. The pipeline later uses this label to decide how many documents to retrieve.
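One property of substring matching is worth seeing in isolation: a keyword such as "how" also matches inside "show". The sketch below reuses the tutorial's keyword lists and compares substring matching with whole-word matching based on `re` word boundaries. The names `count_hits` and `route`, and the `whole_words` flag, are hypothetical and only illustrate the idea.

```python
import re
from typing import Dict, List

CATEGORIES: Dict[str, List[str]] = {
    "technical": ["how", "implement", "code", "function", "algorithm", "debug"],
    "factual": ["what", "who", "when", "where", "define", "explain"],
    "comparative": ["compare", "difference", "versus", "vs", "better", "which"],
    "procedural": ["steps", "process", "guide", "tutorial", "how to"],
}

def count_hits(query: str, keywords: List[str], whole_words: bool) -> int:
    """Count matching keywords, either as substrings or as whole words."""
    text = query.lower()
    if whole_words:
        return sum(1 for kw in keywords if re.search(r"\b" + re.escape(kw) + r"\b", text))
    return sum(1 for kw in keywords if kw in text)

def route(query: str, whole_words: bool = True) -> str:
    scores = {cat: count_hits(query, kws, whole_words) for cat, kws in CATEGORIES.items()}
    best = max(scores, key=scores.get)  # ties go to the first category in dict order
    return best if scores[best] > 0 else "factual"

print(route("Show me what RAG is", whole_words=False))  # technical
print(route("Show me what RAG is", whole_words=True))   # factual
```

With substring matching, "show" scores a hit for "how", the tie with "what" goes to the first category, and the query is routed as technical. Whole-word matching routes it as factual.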

class AnswerGenerator:
    """Generates answers with Flan-T5 and applies heuristic quality checks."""

    def __init__(self, model_name="google/flan-t5-base"):
        print(f"Loading generation model: {model_name}...")
        self.generator = pipeline(
            'text2text-generation',
            model=model_name,
            device=0 if torch.cuda.is_available() else -1,
            max_length=256,
        )
        device_type = "GPU" if torch.cuda.is_available() else "CPU"
        print(f"✓ Generator ready (using {device_type})\n")

    def generate(self, query: str, context: List[Dict], query_type: str) -> str:
        context_text = "\n\n".join([f"[{doc['source']}]: {doc['text']}" for doc in context])
        # Build the prompt from the retrieved context and the question.
        prompt = f"""Context:
{context_text}

Question: {query}

Answer:"""
        answer = self.generator(prompt, max_length=200, do_sample=False)[0]['generated_text']
        return answer.strip()

    def self_check(self, query: str, answer: str, context: List[Dict]) -> Tuple[bool, str]:
        # Check 1: reject very short answers.
        if len(answer) < 10:
            return False, "Answer too short - needs more detail"

        # Check 2: the answer must share words with the first 20 words of each document.
        context_keywords = set()
        for doc in context:
            context_keywords.update(doc['text'].lower().split()[:20])
        answer_words = set(answer.lower().split())
        overlap = len(context_keywords.intersection(answer_words))
        if overlap < 2:
            return False, "Answer not grounded in context - needs more evidence"

        # Check 3: the answer must share at least one word with the query.
        query_keywords = set(query.lower().split())
        if len(query_keywords.intersection(answer_words)) < 1:
            return False, "Answer doesn't address the query - rephrase needed"

        return True, "Answer quality acceptable"

We build the AnswerGenerator class to handle answer creation and self-evaluation. Using the Flan-T5 model, we generate a response from a prompt that contains the retrieved documents and the question. We then run a heuristic self-check on three things: the answer's length, its word overlap with the retrieved context, and its word overlap with the query.
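The grounding check counts raw whitespace-separated words, so common words such as "the" or "is" can satisfy it. As a hedged variation, not the tutorial's method, the sketch below scores grounding as the fraction of the answer's non-stopword tokens that also occur in the context. The names `content_words` and `grounding_score` and the small `STOPWORDS` set are assumptions made for illustration.

```python
import re
from typing import List, Set

# A deliberately tiny stopword list; a real one would be longer.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "in", "on",
             "to", "and", "or", "with", "for", "it", "this", "that", "as", "by"}

def content_words(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def grounding_score(answer: str, context_texts: List[str]) -> float:
    """Fraction of the answer's content words that appear in the context (0.0 to 1.0)."""
    answer_words = content_words(answer)
    if not answer_words:
        return 0.0
    context_words: Set[str] = set()
    for text in context_texts:
        context_words |= content_words(text)
    return len(answer_words & context_words) / len(answer_words)

context = ["RAG combines information retrieval with text generation."]
print(grounding_score("RAG combines retrieval with generation", context))  # 1.0
print(grounding_score("Paris is the capital of France", context))          # 0.0
```

A threshold on this score could replace the fixed "at least two shared words" rule, at the cost of choosing a cutoff.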

class AgenticRAG:
    """Orchestrates routing, retrieval, generation, and self-checking."""

    def __init__(self):
        self.vector_store = VectorStore()
        self.router = QueryRouter()
        self.generator = AnswerGenerator()
        self.max_iterations = 2

    def add_knowledge(self, documents: List[str], sources: List[str]):
        self.vector_store.add_documents(documents, sources)

    def query(self, question: str, verbose: bool = True) -> Dict:
        if verbose:
            print(f"\n{'='*60}")
            print(f"🤔 Query: {question}")
            print(f"{'='*60}")

        query_type = self.router.route(question)
        if verbose:
            print(f"📍 Route: {query_type.upper()} query detected")

        # Retrieval depth depends on the route; factual queries use the default of 3.
        k_docs = {'technical': 2, 'comparative': 4, 'procedural': 3}.get(query_type, 3)
        iteration = 0
        answer_accepted = False

        while iteration < self.max_iterations and not answer_accepted:
            iteration += 1
            if verbose:
                print(f"\n🔄 Iteration {iteration}")

            context = self.vector_store.search(question, k=k_docs)
            if verbose:
                print(f"📚 Retrieved {len(context)} documents from sources:")
                for doc in context:
                    print(f"   - {doc['source']}")

            answer = self.generator.generate(question, context, query_type)
            if verbose:
                print(f"💡 Generated answer: {answer[:100]}...")

            answer_accepted, feedback = self.generator.self_check(question, answer, context)
            if verbose:
                status = "✓ ACCEPTED" if answer_accepted else "✗ REJECTED"
                print(f"🔍 Self-check: {status}")
                print(f"   Feedback: {feedback}")

            # On rejection, rephrase the query and widen retrieval for the next attempt.
            if not answer_accepted and iteration < self.max_iterations:
                question = f"{question} (provide more specific details)"
                k_docs += 1

        return {
            'answer': answer,
            'query_type': query_type,
            'iterations': iteration,
            'accepted': answer_accepted,
            'sources': [doc['source'] for doc in context],
        }

We combine all components into the AgenticRAG system, which orchestrates routing, retrieval, generation, and quality checking. When the self-check rejects an answer, the system appends a request for more specific details to the query, retrieves one more document, and tries again, up to max_iterations attempts. This creates a feedback-driven, decision-tree-style RAG loop.
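The control flow of this loop can be exercised without loading any model. The sketch below is a simplified, hypothetical version that takes the retrieve, generate, and check steps as plain callables. The stub functions and the `k_used` field are assumptions added for illustration and stand in for the real vector store and generator.

```python
from typing import Callable, Dict, List, Tuple

def refine_until_accepted(
    question: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str, List[str]], str],
    check: Callable[[str, str, List[str]], Tuple[bool, str]],
    k: int = 3,
    max_iterations: int = 2,
) -> Dict:
    """Retry retrieval and generation until the check passes or attempts run out."""
    answer, accepted, iterations = "", False, 0
    while iterations < max_iterations and not accepted:
        iterations += 1
        context = retrieve(question, k)
        answer = generate(question, context)
        accepted, _feedback = check(question, answer, context)
        if not accepted and iterations < max_iterations:
            # Same refinement as the tutorial: rephrase and retrieve one more document.
            question = f"{question} (provide more specific details)"
            k += 1
    return {"answer": answer, "iterations": iterations, "accepted": accepted, "k_used": k}

# Stub components: the check passes only once three documents are in the context.
docs = ["doc A", "doc B", "doc C", "doc D"]
stub_retrieve = lambda q, k: docs[:k]
stub_generate = lambda q, ctx: " | ".join(ctx)
stub_check = lambda q, a, ctx: (len(ctx) >= 3, "ok" if len(ctx) >= 3 else "need more context")

result = refine_until_accepted("What is RAG?", stub_retrieve, stub_generate, stub_check, k=2)
print(result)
```

With these stubs the first attempt retrieves two documents and is rejected, and the second retrieves three and is accepted.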

def main():
    print("\n" + "="*60)
    print("🚀 AGENTIC RAG WITH ROUTING & SELF-CHECK")
    print("="*60 + "\n")

    # add_knowledge pairs documents with sources by position, so supply one document per source.
    documents = [
        "RAG (Retrieval-Augmented Generation) combines information retrieval with text generation. It retrieves relevant documents and uses them as context for generating accurate answers."
    ]
    sources = ["Python Documentation", "ML Textbook", "Neural Networks Guide", "Deep Learning Paper", "Transformer Architecture", "RAG Research Paper"]

    rag = AgenticRAG()
    rag.add_knowledge(documents, sources)

    test_queries = ["What is Python?", "How does machine learning work?", "Compare neural networks and deep learning"]

    for query in test_queries:
        result = rag.query(query, verbose=True)
        print(f"\n{'='*60}")
        print("📊 FINAL RESULT:")
        print(f"   Answer: {result['answer']}")
        print(f"   Query Type: {result['query_type']}")
        print(f"   Iterations: {result['iterations']}")
        print(f"   Accepted: {result['accepted']}")
        print(f"{'='*60}\n")

if __name__ == "__main__":
    main()

We finalize the demo by loading a small knowledge base and running test queries through the Agentic RAG pipeline. We observe how the system routes, retrieves, and refines answers step by step, printing intermediate results for transparency. Each final result reports the answer, the detected query type, the number of iterations, and whether the self-check accepted it, all computed locally.
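Because add_documents pairs texts and sources with `zip`, a knowledge base whose two lists differ in length is silently truncated to the shorter one. The following is a minimal sketch of a guard, assuming we would rather fail loudly than mislabel a document. `pair_documents` is a hypothetical helper, not part of the tutorial's code.

```python
from typing import Dict, List

def pair_documents(docs: List[str], sources: List[str]) -> List[Dict[str, str]]:
    """Pair each document with its source, refusing mismatched list lengths."""
    if len(docs) != len(sources):
        raise ValueError(f"Got {len(docs)} documents but {len(sources)} sources")
    return [{"text": doc, "source": src} for doc, src in zip(docs, sources)]

print(pair_documents(["RAG combines retrieval with generation."], ["RAG Research Paper"]))
```

Calling it with one document and six sources raises a ValueError instead of indexing a single, possibly mislabeled entry.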

In conclusion, we create a working Agentic RAG framework that retrieves, generates, and refines its answers without external services. We see how the system routes different query types, evaluates its own responses, and retries when a check fails, all within a lightweight, local environment. Through this exercise, we deepen our understanding of RAG architectures and see how agentic components can turn a static retrieval system into one that checks and revises its own output.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.